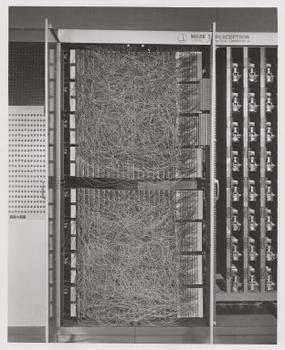

A common criticism of deep learning models is that they are 'black boxes'. You put data in one end as your inputs, the argument goes, and you get some predictions or results out the other end, but you have no idea why the model gave you those predictions.

Part of this comes from how neural networks work: they often have many layers, all of which take part in the 'learning', and each successive layer may be able to recognise more complex features or greater levels of abstraction. In the above image, you can get a sense of how the earlier layers (on the left) learn basic contour features, which later layers then combine into more general face features, and so on.

Some of this also has to do with the fact that you train a model on the assumption that it will later be used on data it hasn't seen. In this (common) use case, it becomes a bit harder to say exactly why a certain prediction was made, though there are a lot of ways we can start to open up the black box.
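
To make 'opening up the black box' a little more concrete, here is a minimal sketch of one simple approach, often called occlusion sensitivity: replace one input at a time with a baseline value and see how much the prediction moves. Everything in it is an assumption made for illustration. The tiny network, its hand-picked weights, and the names `predict` and `occlusion_importance` are hypothetical and do not come from a trained model or from any particular library.

```python
import math

# Hypothetical toy network: 3 inputs -> 2 hidden units (ReLU) -> 1 sigmoid output.
# The weights are made up for illustration; they are not from a trained model.
W1 = [[2.0, -1.0, 0.0],
      [0.5, 0.5, 0.0]]
B1 = [0.0, -0.5]
W2 = [1.5, -1.0]
B2 = -0.5


def predict(x):
    """Forward pass: return a score between 0 and 1 for one input vector."""
    hidden = [
        max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(W1, B1)
    ]
    logit = sum(w * h for w, h in zip(W2, hidden)) + B2
    return 1.0 / (1.0 + math.exp(-logit))


def occlusion_importance(x, baseline=0.0):
    """Score each input by how much the prediction drops when it is occluded."""
    base_pred = predict(x)
    scores = []
    for i in range(len(x)):
        occluded = list(x)
        occluded[i] = baseline  # replace a single feature with the baseline
        scores.append(base_pred - predict(occluded))
    return scores


x = [1.0, 0.5, 3.0]
scores = occlusion_importance(x)
for i, score in enumerate(scores):
    print(f"feature {i}: {score:+.3f}")
```

A positive score means the feature pushed the prediction up, a negative score means it pulled the prediction down, and a score of zero means that (for this input and this baseline) the model ignored it. The choice of baseline matters: zero is just a convenient default here, and a different baseline can give different scores.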